Software & Robot Hardware Development

This page discusses how an emotion model might look in a robot, then shows the following projects:

- 🤖Humanoid Rover Robot run by Emotion Model

- 💪Servo Motor Setup App for Humanoid Rover

- 🐵Virtual agent run by Cognitive Model (can use tools!!)

Emotion in a Robot

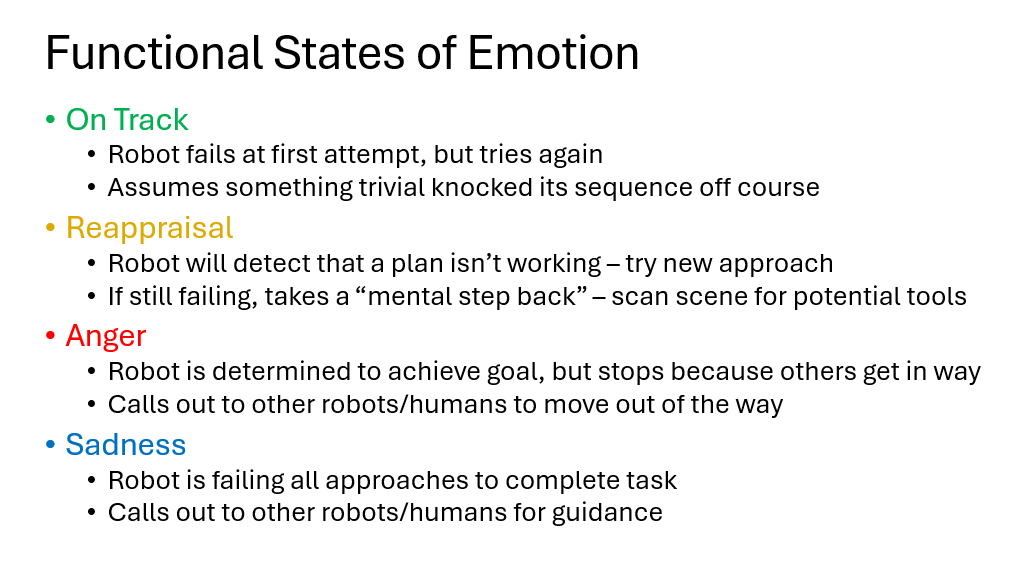

Drawing on research into functional brain systems, I developed a neural network control circuit for flexible robotic behavior. The system drives motivation, tracks goal progress, and adjusts responses when plans fail. These capabilities are implemented through the emotion states listed below.

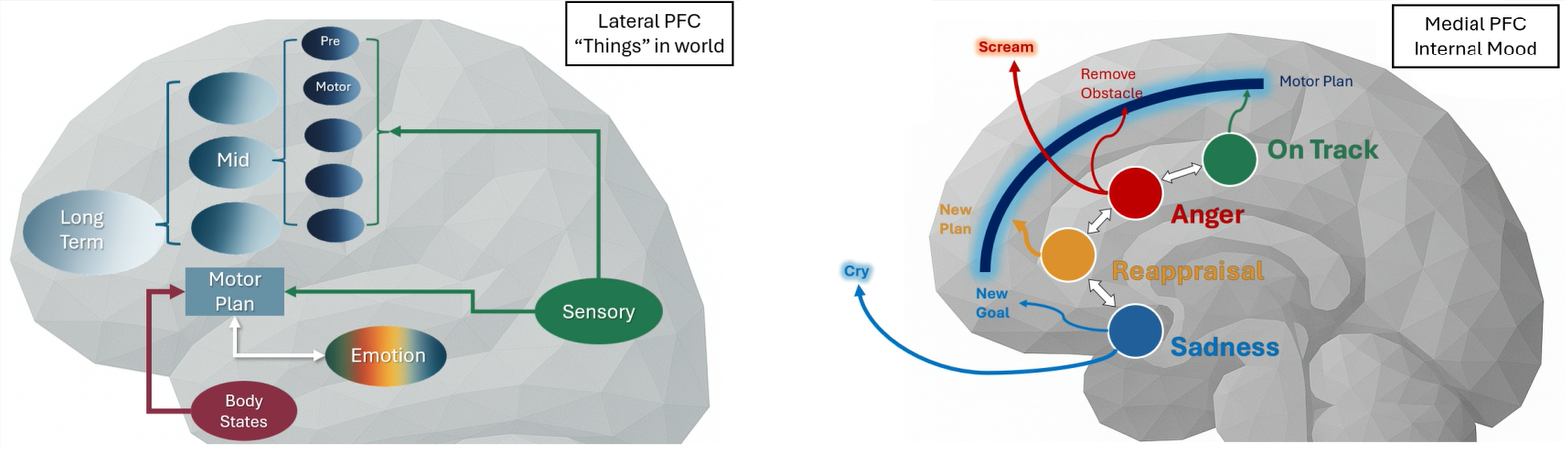

Theoretical Model of Functional Emotion in the Prefrontal Cortex (PFC)

Given the functional states listed above and current theories of functional organization in the brain, the Emotion system can be integrated with a Motor Planning system as shown below:

🤖Project: Emotion Model in Humanoid Rover Robot

This video shows the following:

- The "Still-Face" experiment: a well-known child psychology study that demonstrates how young children react when social engagement unexpectedly fails

- How a theoretical model of emotional processing and behavioral reaction can explain the effects observed in the "Still-Face" experiment

- The hardware and software components of the physical robot system used in testing a cognitive model

- How the robot controlled by the Emotion Model reacts to unexpected social engagement failures

💪Project: Servo Motor Setup App for Humanoid Rover

In this video, I walk through the Python UI app I created to help set up the robot's servo motors. The video also shows how I tested the pressure sensors we installed in the robot's gripper pads so that he can sense objects and adjust his grip accordingly.
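To make the idea of pressure-based grip adjustment concrete, here is a minimal sketch of a closing loop that stops once the pad pressure reaches a target. It is not the code from the app: `adjust_grip`, `SimulatedGripper`, the normalized pressure scale, and every default value are assumptions chosen for illustration, and the simulated gripper stands in for the real servo and sensor hardware.

```python
# Illustrative sketch only: names, units, and thresholds are hypothetical,
# not taken from the actual servo setup app.

class SimulatedGripper:
    """Stand-in for real hardware: pressure rises linearly after contact."""

    def __init__(self, contact_angle=30.0, stiffness=0.05):
        self.angle = 0.0
        self.contact_angle = contact_angle  # angle at which pads touch the object
        self.stiffness = stiffness          # pressure gained per degree past contact

    def set_angle(self, angle):
        self.angle = angle

    def read_pressure(self):
        return max(0.0, (self.angle - self.contact_angle) * self.stiffness)


def adjust_grip(read_pressure, set_angle, target=0.5, tolerance=0.05,
                start_angle=0.0, max_angle=90.0, step=1.0):
    """Close the gripper in small steps until pad pressure nears the target.

    Returns the final (angle, pressure). If no object is ever felt, the
    gripper stops at max_angle.
    """
    angle = start_angle
    set_angle(angle)
    while angle < max_angle:
        pressure = read_pressure()
        if pressure >= target - tolerance:
            return angle, pressure  # firm enough: stop squeezing
        angle = min(angle + step, max_angle)
        set_angle(angle)
    return angle, read_pressure()


if __name__ == "__main__":
    gripper = SimulatedGripper()
    angle, pressure = adjust_grip(gripper.read_pressure, gripper.set_angle)
    print(f"stopped at {angle:.1f} degrees, pressure {pressure:.2f}")
```

Passing the sensor read and servo write as plain callables keeps the loop testable without hardware; on a real robot they would be replaced by whatever driver calls the servo controller exposes.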

🐵Project: Virtual agent run by Cognitive Model (can use tools!!)

This video shows my early cognitive model that controls an agent in a virtual environment. Equipped with a rudimentary decision-making system, the agent has the following capabilities:

- Associate value with object events

- Form appropriate motor sequences to achieve goals

- React to unexpected outcomes – attend to emphasized cues to aid learning

- React to unplanned obstacles – reappraise the situation to drive new strategies (including tool use!)

- Read social cues – react to facial expressions in a target individual to keep social engagement on track
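As a rough illustration of the first and third capabilities above (associating value with object events, and attending more strongly when an outcome is unexpected), the sketch below uses a simple delta-rule update whose learning rate is boosted on surprising outcomes. This is a generic textbook-style technique swapped in for illustration, not the cognitive model from the video; `ValueMemory`, the event name, and all parameter values are hypothetical.

```python
# Illustrative sketch only: a delta-rule value learner with a surprise boost.
# It is an assumption-laden stand-in, not the model shown in the video.
from collections import defaultdict


class ValueMemory:
    """Stores a learned value for each object event."""

    def __init__(self, learning_rate=0.2, surprise_threshold=0.5, boost=2.0):
        self.values = defaultdict(float)  # unseen events start at value 0.0
        self.learning_rate = learning_rate
        self.surprise_threshold = surprise_threshold
        self.boost = boost

    def update(self, event, reward):
        """Move the event's value toward the observed reward.

        Returns (prediction_error, surprising). A large prediction error is
        treated as a surprise and learned from at a boosted rate.
        """
        error = reward - self.values[event]
        surprising = abs(error) > self.surprise_threshold
        rate = self.learning_rate * self.boost if surprising else self.learning_rate
        rate = min(rate, 1.0)  # never overshoot the observed reward
        self.values[event] += rate * error
        return error, surprising


if __name__ == "__main__":
    memory = ValueMemory()
    for trial in range(5):
        error, surprising = memory.update("grab_fruit", reward=1.0)
        print(trial, round(memory.values["grab_fruit"], 3), surprising)
```

The boosted rate means early, unexpected rewards shift the stored value quickly, while later, well-predicted outcomes only fine-tune it.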